Steven Forth is the Co-founder & CEO of Ibbaka and a recognized expert in generative AI in pricing. With extensive experience in the intersection of artificial intelligence and pricing strategies, Steven provides valuable insights into how agents are transforming the pricing landscape.

In this episode, Steven discusses the concept of AI agents, their role in pricing, and how they can deliver value in various contexts. Together, Mark and Steven explore the implications of AI-driven agents on user interfaces, pricing strategies, and the future of business interactions.


Why you have to check out today’s podcast:

- Understand what agents are and how they differ from general-purpose AI.

- Discover how pricing strategies for agents can be structured around value delivery.

- Learn about the future of agent-to-agent interactions and their impact on pricing.

“Understanding what job your agent is going to do is critical for pricing.”

– Steven Forth

Topics Covered:

02:07 – Definition of agents and their functionality.

03:17 – Differences between agents and general-purpose AI like ChatGPT.

05:11 – User interface simplicity and complexity in agent design.

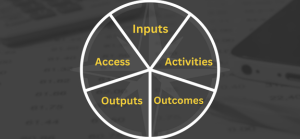

08:16 – The AI agent layer cake and its implications for pricing.

11:12 – Access and usage-based pricing for agents.

14:03 – Outcomes-based pricing and its challenges.

16:16 – Tokenization.

20:27 – The importance of understanding the job an agent performs for pricing.

24:31 – Steven’s insights on the evolving landscape of pricing strategies.

31:28 – Steven’s pricing advice around agents.

31:53 – How to connect with Steven.

Key Takeaways:

“Agents make it easier to do things, and if those things are valuable, we’re going to do them a lot more.” – Steven Forth

“Pricing agents will require a shift in how we think about value delivery.” – Steven Forth

Resources and People Mentioned:

- Jakob Nielsen: https://en.wikipedia.org/wiki/Jakob_Nielsen_(usability_consultant)

- OpenAI: https://openai.com/

- Zilliant: https://zilliant.com/

- Perplexity: https://www.perplexity.ai/

- Salesforce’s Agentforce: https://www.salesforce.com/ap/agentforce/

- Marc Benioff: https://en.wikipedia.org/wiki/Marc_Benioff

- AI Agent Layer Cake: https://www.ibbaka.com/ibbaka-market-blog/how-to-price-ai-agents

Connect with Steven Forth:

- LinkedIn: https://www.linkedin.com/in/stevenforth/

- Email: [email protected]

Connect with Mark Stiving:

- LinkedIn: https://www.linkedin.com/in/stiving/

- Email: [email protected]

Full Interview Transcript

(Note: This transcript was created with an AI transcription service. Please forgive any transcription or grammatical errors. We probably sounded better in real life.)

Steven Forth

The critical thing here is to understand what job your agent is going to do, and all the rest of the pricing will unfold from understanding that. What’s the job, what’s the value of the job? If you can understand and be really crisp on that, I think the rest of the pricing will unfold very easily.

[Intro / Ad]

Mark Stiving

Welcome to Impact Pricing, the podcast where we discuss pricing, value, and the codependent relationship between them.

I’m Mark Stiving, and I run boot camps to help companies get paid more.

Our guest today is, once again, Mr. Steven Forth.

I’ll tell you three things about Steven before we start, because I haven’t said these in a while. He is the CEO of Ibbaka. He’s a frequent guest on the podcast, and he is, in my mind, the world’s expert on AI and pricing.

So welcome, Steven.

Steven Forth

Mark, I’m glad to be back.

Mark Stiving

So I had this really brief conversation with Steven before we hit record. And what I want to do today, Steven really and truly knows so much more about AI and what’s going on. And I’m so confused by it all. When I talk to him, he’s beyond me.

So I want to ask a bunch of really simple questions. And specifically, we’re going to talk about agents.

And we’re going to talk about, how do we price agents, or how do we see agents changing the world of pricing? So let’s start with the softball, although I’m sure it’s not.

What the heck is an agent?

Steven Forth

So I think agents, which have sort of emerged out of nowhere in the last six to eight months, are really a way of packaging functionality, almost any type of functionality. And they’ve become popular because they’re a really good way to package AI.

So an agent is something that does something for you. It’s that simple.

It’s a constrained piece of functionality and it will go away and it will do something. Maybe it will book a flight for you. Maybe it will figure out your calendar for you. Maybe it will create a sales presentation for you. Maybe it will act like a sales development representative for you.

So they are very clearly packaged pieces of functionality that do a specific job.

Mark Stiving

So let’s push on that a little bit, if I can.

So let’s talk about booking a flight for me for a second. I could get onto ChatGPT and type in a prompt.

So, how is an agent different from me using ChatGPT myself to say, “Hey, what’s the best flight I need?”

Steven Forth

Yeah, and ChatGPT is a general-purpose AI, and it does many things. That’s going to be part of the tension between what you can do through general-purpose platforms such as ChatGPT or Perplexity or You.com or Gemini or whatever, and what you can do more effectively through a very focused piece of software.

So for example, the agent may well have, behind the scenes, direct connections to the booking software.

It has the same sort of AI experience up front, you know, the conversational experience up front, but behind the scenes, it has additional purpose-built integrations.

Depending on the type of agent, it’s probably been trained on some very specific content.

So, you know, it gives a more focused answer than a generalized application is likely to do. So think of agents as built for purpose AIs with lots of integrations running in the background.

Mark Stiving

Okay, then let’s talk about the simplicity versus complexity of the user interface of an agent. So I went on agent AI and I built my own agent and it asked you very specific questions, right?

What market segment are you going after? What’s the URL of the product that you want to sell?

And then it goes through an AI prompt and it spits out some output the way I designed an output.

So to me, that’s really, it’s almost like if this, then that, but I bounced it across an AI.

And then I go to your website and you’ve built what you call an agent, and it looks to me like a chat box where I could type in anything I want and you respond with, I have no idea how you got to all that stuff, but you can respond with words and context.

So is there a restriction or complexity issue around this user interface for an agent?

Steven Forth

So I think in general, this is a really big question, and for those who are interested in it, Jakob Nielsen, who’s sort of the guru of user interface design and customer experience design.

So Jakob Nielsen earlier this year wrote a post where he said basically that agents are going to replace UI and they are going to replace accessibility issues.

So the way that we are going to access virtually all software in the future will be through agents.

So applications like big ERP systems, like CRM systems, all of those are going to fade down into the background. They won’t go away. They’ll still be there in the background, part of the old hindbrain of the global information system.

But the way that you interact with them will be through agents. And these agents will provide very simple, easy to understand, easy to use user experiences.

Mark Stiving

Okay, so I hear those words, but I don’t picture it, right?

So today in a user interface, I might have to say, you know, for whatever it is I’m doing, I’m male, I’m 5’8″, I weigh 190, whatever the heck it is, because that’s information that has to go into the application to make good decisions.

So is an agent just going to ask me those questions?

Steven Forth

I think for that sort of information, the agent will probably already, so you will, jumping ahead a bit, you will actually have your own agent that represents you and manages information and protects information about you.

So the future of this is not going to be so much humans interacting with agents as a whole web of humans interacting with agents that interact with agents that interact with humans.

It’s going to be a far more complex web under the surface.

So the experience will be far simpler. Just like, you know, you can almost think of Google search as an early form of agent. You know, and Google search was really disciplined initially. I think they’re losing some of their discipline lately, but they were very disciplined about having, you know, a very simple screen. It pops up and it has one box that you type things into.

Compare that with some of the older search engines, which had highly complex and confusing user interfaces.

Agents are going to primarily have very, very simple interfaces that only ask you to do a few things.

And then that interface will change depending on what you respond to. And many agents will allow voice interaction, not just text interaction.

Mark Stiving

Okay, so let’s get into the topic that you and I like a lot, and that’s pricing.

And so how is it that we should be thinking about putting a price on agents?

Not creating agents to do pricing, but finding an agent and saying, oh, this is what we should charge for that service or capability.

Now, I’m a traditionalist. I think, hey, it just comes down to value. What’s the big deal?

Let’s figure out how much value we’re delivering and charge for that. I’m sure you don’t think that way.

Steven Forth

Well, I absolutely agree that it just comes down to value. Let’s figure out how much value we’re delivering and charge for that.

But I think agents are going to be delivering value in different ways.

So in many ways, they’re actually a better target for value-based pricing, because what they do is simpler and more concrete.

And this is why the whole jobs-to-be-done approach is, I think, going to be really effective in helping us understand how to price agents.

So a couple of weeks ago, Ibbaka published what we call the AI Agent Layer Cake.

And it’s a layer cake because it slices the different ways that you can provide value into different layers. And at the bottom is what job is the agent doing?

Now, an agent that books a flight for you is doing a very specific job. It’s not the most high value job in most cases. An agent that diagnoses your cancer and says specifically what kind of cancer it is. That’s an extraordinarily high value agent.

So you’ll remember, what was it, a month or so ago, OpenAI said that in the future they’re going to have PhD level agents and they’re going to charge $20,000 a month for them?

Well, you know, probably depends on what the agent has its PhD in. But, you know, the sort of basic substrate I think that we’re going to use when we price agents or we’re going to begin is what role does this agent play? Then the next layer of the layer thing.

Mark Stiving

Wait, before we go, before we go, before we go, you could almost argue that the role is essentially saying what’s the type of value I’m delivering to the end output.

Steven Forth

Yeah. What job am I doing?

Mark Stiving

And so that’s, I would say, very highly correlated, if not perfectly correlated with value or value-based pricing.

Steven Forth

I completely agree. Okay. You know, I think, if we’re truthful, value-based pricing is, you know, something we all talk a lot about. There’s very little value-based pricing that actually happens.

Most of it is hand-waving, and yeah, we don’t generally price on value. For a variety of reasons, we can have a different session on that.

Mark Stiving

Yeah. We’ll, we’ll, we’ll dive into that one day.

Steven Forth

I think, you know, you know, most of the people that say they’re pricing on value are not, but that’s a separate issue. And so, yeah, I agree, you know, by, by being able to define, say, what, what job does the agent do? That gives us a really good grounding in value and it gives us a really good place to start thinking about, okay, how are we going to price this agent?

And in some cases we can look at the human job that it’s replacing or complementing, and that will give us some, some reference points, some anchors to, to think about agent pricing.

The second layer of the layer cake is access. Think about, you know, your relationship with a lawyer, where some people pay a lawyer a retainer.

So they’ll have that lawyer available when they need them. Whether they need them or not, they pay a retainer.

And paying for access is, in a sense, a retainer. And I think it’s going to be important in situations where there’s a lot of competition for compute resources. Some agents are going to have relatively low compute requirements, but other agents are going to have very, very high compute requirements.

And you don’t want to be subject to, or put at risk of, fluctuating compute charges.

So in some cases you’ll be able to pay a retainer or pay an access fee that will stabilize the price that you need to pay. And as the vendor, it gives you an assured stream of income.

Mark Stiving

So I could imagine, first off, it doesn’t feel like access and role are orthogonal. I’m sorry, they’re not different or independent of each other.

So for instance, I might pay for access to a doctor or to a diagnosis service, and I would pay more than I would pay for access to a travel agent service.

Steven Forth

Exactly, right. Yeah.

Mark Stiving

The other thing about access that I find interesting, you talked about limited compute resources. I could almost imagine that I want to pay for access because I want that agent to know me.

And so, I don’t want it to forget me, just keep remembering who I am.

So when I ask you a question, you remember who I am and we don’t have to go through a bunch of details again.

Steven Forth

Yeah. And we’ll come to this later, but I think part of the power of agents is that they are going to be very context driven. They’re very aware of, good agents are very aware of the context in which they’re operating.

And that’s, you know, part of the characteristic of when you’re designing an agent and you want to design agents that are context aware.

Mark Stiving

I can’t wait to hear more about that.

Steven Forth

Then the next layer of the layer cake is use. How many actions does the agent take for you?

And this maps pretty closely to usage-based pricing, but it defines usage in terms of the actions that an agent takes.

So rather than, you know, having sort of excessively granular measures of usage that don’t really correlate well with value, you want to figure out what actions the agent is taking, how valuable that action is, and then charge based on the number of actions taken.

And then finally, we get to the very top of the layer cake, the icing on the cake, so to speak, is outcomes-based pricing. So that is the outcome of an action. But I think we’ve discussed this before, right?

Outcome-based pricing is not for everyone. In order to really make outcome based pricing work, you have to satisfy three conditions.

So one is that you can agree on the outcome. It’s actually easier said than done in many cases.

Secondly, that you can agree on the attribution of what led to the outcome. Also easier said than done.

And finally, that you can predict outcomes to give some level of certainty to price. Now, I actually think that, you know, these are actually in order of difficulty. I actually think that agreeing on outcomes is the most difficult thing.

Attributing what led to the outcome is the second most difficult and prediction is actually the easiest thing in the world that we’re moving into. I know that’s the opposite of what many people think, but having looked at a lot of outcome based pricing designs, that’s how, you know, what my experience has been. You can get an agent today that will build you a predictive model pretty easily. You know, there’s no magic to it.

You know, Ibbaka has its own predictive models that you give it some data and in two or three minutes, it comes back to you with a pretty good predictive model that is also very specific about how confident it is in its predictions. And you know, we’re a tiny company of, you know, 10-12 people.

Anyone can do this today.
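(Editor's sketch: the four layers described above, role, access, use, and outcome, can be read as terms that add up to a charge. The short Python example below is only an illustration under stated assumptions. The class name, field names, and every number are hypothetical and are not Ibbaka's actual pricing model.)

```python
from dataclasses import dataclass


@dataclass
class AgentPricePlan:
    """Hypothetical plan mirroring the layer cake: role, access, use, outcome."""
    role: str                # the job the agent does; anchors the other numbers
    access_fee: float        # flat, retainer-style fee per month
    price_per_action: float  # use layer: charge per action the agent takes
    outcome_share: float     # fraction of the agreed outcome value paid to the vendor


def monthly_charge(plan: AgentPricePlan, actions: int, outcome_value: float) -> float:
    """Add up the access, use, and outcome layers for one month."""
    usage = plan.price_per_action * actions
    outcome = plan.outcome_share * outcome_value
    return plan.access_fee + usage + outcome


# All figures below are made up for illustration.
plan = AgentPricePlan(role="travel booking", access_fee=50.0,
                      price_per_action=2.0, outcome_share=0.25)
charge = monthly_charge(plan, actions=20, outcome_value=400.0)
print(charge)  # 50 access + 40 use + 100 outcome = 190.0
```

A higher-value role, such as the diagnosis example, would presumably justify larger numbers in every layer, and a vendor that cannot meet the three conditions for outcome-based pricing could simply set `outcome_share` to zero.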

Mark Stiving

Yeah. Okay, so here’s what’s interesting about your layer cake.

By the way, first off, I love the cake. It gives you a way to think, hey, where are the different ways that I could see the value in what we’re delivering as an agent or receiving as the buyer of an agent?

So I think that’s awesome.

But I’m actually shocked that the word token isn’t in here anywhere. Now, I’ve probably slammed the word token a bunch of times saying, hey, that’s just cost plus pricing.

But it turns out that it’s such a popular phrase in AI or such a popular technique in AI. Why have you taken that out or not included it?

Steven Forth

Well, it’s exactly what you said. Tokens are measures of compute. Well, it’s a bit simplistic.

I mean, part of the process of building one of these large language models is tokenization.

So you take whatever content it is you’re working with and you turn it into a stream of tokens.

And tokenization plus embeddings are foundational to large language models and transformer architectures.

But they really are a measure of cost, not of value.

Interesting thing that’s happened since the reasoning AIs were introduced is that OpenAI anyway, actually has three different types of tokens it charges you for.

It has the input token. Okay, fine.

It has the output token and OpenAI is interesting in that it charges less for input tokens than it does for output tokens.

Now at Ibbaka, I am thankful for that because Ibbaka tends to have a lot of input tokens and relatively few output tokens. Please don’t change that pricing.

But the other thing that they introduced is reasoning tokens.

So if you look at the way that these AI reasoning models work is that they generate a lot of tokens in the process of doing the reasoning. And they charge you for those tokens.

And OpenAI in its wisdom, remember that output tokens are charged more than input tokens. So it charges reasoning tokens as output tokens.

And, you know, which leads to the really interesting question, you know, for any, let’s call it an act that an agent is going to take, how many tokens are being consumed?

So we have instrumentation in our own AIs to track that. And it turns out that, you know, about 50 to 70% of the tokens consumed and paid for are reasoning tokens.

So, it’s this hidden, harder to manage cost that AI systems, you know, force us to incur.

But I mean, for them, it’s good because it’s a measure of compute and compute is their major variable cost.

So it makes good sense for them.
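(Editor's sketch: the token arithmetic described above can be made concrete. The Python example below assumes, as stated in the conversation, that reasoning tokens are billed at the output-token rate. The function name, token counts, and per-million-token prices are hypothetical placeholders, not any vendor's published rates.)

```python
def cost_per_action(input_tokens: int, output_tokens: int, reasoning_tokens: int,
                    input_price_per_m: float, output_price_per_m: float):
    """Return (cost in dollars, reasoning share of all tokens) for one agent action.

    Assumption: reasoning tokens are charged as output tokens.
    Prices are expressed per one million tokens.
    """
    billed_output = output_tokens + reasoning_tokens
    cost = (input_tokens * input_price_per_m
            + billed_output * output_price_per_m) / 1_000_000
    reasoning_share = reasoning_tokens / (input_tokens + billed_output)
    return cost, reasoning_share


# Hypothetical action: 3,000 input, 1,000 visible output, 6,000 reasoning tokens,
# at made-up prices of $2 (input) and $8 (output) per million tokens.
cost, share = cost_per_action(3_000, 1_000, 6_000,
                              input_price_per_m=2.0, output_price_per_m=8.0)
print(round(cost, 4), round(share, 2))  # 0.062 0.6
```

In this made-up case the reasoning tokens are 60% of all tokens consumed, inside the 50 to 70% range mentioned above, and because they are billed at the higher output rate they account for most of the cost of the action.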

Mark Stiving

What was pretty funny is when we just talked about outcomes, one of the key factors was the predictability of the future.

And so, I don’t know about you, you probably do have the ability, but I have no idea how many tokens I use when I do a prompt.

Steven Forth

Yeah. And that’s in part because you primarily use ChatGPT, I think, right?

Mark Stiving

So my strategy is I use ChatGPT for everything pricing related, and I use Perplexity for everything personal related.

Steven Forth

Okay. I’m almost the exact opposite.

But yeah, so because we’re paying monthly fees for our personal use, we’re not sensitive to the number of tokens we’re using. And rather than charging us for use of extra tokens, they tend to throttle our usage.

So if the system thinks you’re using too many tokens, it’ll slow you down. And unfortunately, in some cases, it just sort of craps out on you.

So they are throttling token usage through mechanisms other than price. But when you’re using API access, in building an actual AI system or an AI agent, you are paying per token.

So understanding, you know, token use is a big deal. And, you know, we are going to see a whole raft of applications and probably agents that will go in and optimize prompts to minimize token use.

Mark Stiving

Okay, so without looking too far into the future, which I know you’re able to do, what I would really like to know is we have talked in the past about agents interacting with agents.

Now, I don’t want the agent interacting with agent interacting with agent, you know, the humans are nowhere near the loop anymore.

But what I do want to know is, in a really simplistic way, how do we price an agent when it talks to another agent?

Steven Forth

I don’t think, you know, here I’m on your side, Mark. I think the principles remain the same.

You know, what role is the agent performing? How assured are we with access? And access can not just be access, but it can also be speed of response.

How many times is the agent performing the task? And how valuable is the successful completion of the task? It doesn’t really matter if the agent’s interacting with another agent or interacting with a human. And sometimes we probably won’t even know.

So I don’t think it impacts pricing at that level. But I think what will happen is that there are going to be a lot more interactions.

So I need to take a really concrete example. So Ibbaka, my company has developed a way to generate value models using artificial intelligence.

And it works pretty well. It’s pretty solid. We were able to do this because we have data on lots of value models.

We have some fairly sophisticated mathematical engineering capabilities on the backend, and we can provide very rich context.

So we have this functionality. How should we take it to market?

And remember that today Ibbaka’s pricing metric is number of models.

So, you know, one thing we could do is we could just inject this functionality into our current platform and, you know, price it as additional functionality.

Okay. And actually, until about four months ago, that’s what we were planning to do. But another way that we could take this to market is we could bundle it as an agent and say, here’s an agent. Go ahead and generate a value model.

And if you want to use it in Ibbaka, there’s going to be an extra charge for that, and you’re going to have to have a subscription to Ibbaka. If you want to use it with Ecosystems, that’s fine too, because we output it as JSON and Ecosystems can upload it into Ecosystems.

If you want to use it with, let’s say, Zilliant, if Zilliant eventually gets around to having value models as a construct in their systems, which could happen, you can export it to Zilliant. I want people making as many value models as possible and using them in ways that I can’t even imagine. So we have changed course and we are going to price that as an agent.

So having this as an agent changes the number of value models that Ibbaka creates in a year. Take, let’s say, 2024.

So without this AI, we probably would have created maybe 50 or 60 value models in 2024. We actually had the AI in the back end of 2024. So we probably created 150 or so. In 2025, I expect we’re probably going to create over a thousand.

And in 2026, I think the number will probably be more like 100,000. Now, obviously we’re going to be charging less for them.

But overall, this is going to be a huge driver for our revenue. So we have to completely rethink our pricing because agents make it easier to do things.

And if those things are valuable, we’re going to do them a lot more. Hypothesis anyway.
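(Editor's sketch: the volume figures above, roughly 150 value models in 2024 and a projected 100,000 in 2026, show how far the unit price could fall before revenue shrinks. The Python example below works through that arithmetic. The $10,000 starting price is a hypothetical placeholder, since no actual price is given in the conversation.)

```python
def break_even_price(old_price: float, old_volume: int, new_volume: int) -> float:
    """Unit price at the new volume that keeps total revenue unchanged."""
    return old_price * old_volume / new_volume


# Volumes come from the conversation; the old price is made up for illustration.
old_price = 10_000.0
price_2026 = break_even_price(old_price, old_volume=150, new_volume=100_000)
print(price_2026)  # 15.0
```

Under these assumptions, any price above the break-even figure at the higher volume grows revenue, which is one way to read the claim that charging less per model can still be a large revenue driver.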

Mark Stiving

Yep. No, no, I think that’s perfectly fair. And so the thing that we haven’t talked about yet when we think about pricing agents, and I know you and I talked about this on a podcast before, and that’s competition.

So today, when you hand-build a model, it’s really hard to do. People have no idea how to do this, and it takes a lot of expertise.

And it’s wonderful that you can – I’m going to say the word automate, and I apologize if that offends you, but you can automate this expertise. But as soon as you start doing that, then other companies are going to say, oh, look at how much money Ibbaka’s making. I’m going to automate building value models, and I’m going to charge less than Ibbaka.

And so we end up driving prices down. And I think that’s where your price comes down. It’s not the fact that you’re going to do 100,000 of them, because they’re valuable, right? Who wouldn’t pay for it?

Steven Forth

No, I am, I hope what you’re describing happens that there will be a small set, five, six, seven or so agents out there in three years, two or three years that are able to generate value models.

And we compete amongst each other with the quality of the value model because value models come in very different qualities.

You know, we compete in the smart ways we can use value models. And, you know, eventually value models will become, you know, just part of the background information infrastructure. There will be millions of them around. Some of them will be actively used.

Some of them will be updated frequently. Some of them will be updated based on the context that’s driving the use. You know, it’ll be a very different environment from what we have today, where, first of all, few companies have a value model. Most of the companies that do have a value model have a value model that is out of date. And context aware is not context driven. So this is going to totally transform, I think, how things get priced.

Mark Stiving

Okay, so let’s go back. I’m going to ask my original question again, or actually, I’m going to answer my own original question now.

And that is, Zilliant creates a software package that says, not only am I going to tell you what price you should ask for, but I’m going to give you the value model that you need in order to justify the price. And to get the value model, it goes over to Ibbaka’s value model generator. It says, hey, Ibbaka, what’s the value for this customer and this product and this situation?

And you spit out the value model and say, here’s what they should be thinking about and looking at and talking about.

And so now the question is, Zilliant is paying Ibbaka for access to the value model. And nobody actually got in the middle of that. It was just one agent talking to another. I’ll say the word agent. It could have been AI, software application, whatever you want to call that.

Steven Forth

Yeah. So a few years ago, when doing pricing seminars, I would go through the list and say, OK, who here is doing B2B? Who here is doing B2C? Who here is doing B2G? And then I would ask who here is doing M2M? Machine to machine.

Now, you know, there actually is quite a bit of machine to machine pricing that has taken place for a long time, right? A lot of stock trades are machine to machine.

A lot of energy trading is machine to machine. A lot of foreign FX is machine to machine, foreign exchange. I think we’re going to see the word machine to machine replaced by A2A, agent to agent.

And there are going to be a lot of agent to agent transactions and the billing systems are going to have to evolve so that they’re able to support those A2A transactions.

And then, you know, one question is, do I care if I am buying from an agent or buying from a human? Shouldn’t.

So, you know, it’s going to be a very, very different world that we have to act in as pricers in a few years.

Remember the transition, you know, back in, what was it, 2000 or 1999 or somewhere around there, when, you know, Marc Benioff and Salesforce had their “no software” positioning, and we transitioned a large chunk of enterprise software from being on-premise to in the cloud and from being one-time licenses to subscription licenses? You’ve written a book about this.

So that was a big change, right? And it’s a change that’s taken, what, 20, 25 years to percolate through the industry. This shift to an agent economy, so instead of a subscription economy, an agent economy, I think is going to have equally important ramifications, except it’s going to happen a lot faster.

So rather than taking 20 years to play out, I think it’s going to play out over the next five years.

Mark Stiving

So Steven, we’re going to wrap this up, but I want to say it took me 15 years to figure out why SaaS mattered and was important.

And so, I’m hoping it doesn’t take 15 years for me to figure out why agents are important.

How long did it take you? When did you know SaaS was actually going to change the world?

Steven Forth

I, well, in my case for SaaS, it was quite early on because of the business I was running.

So back in that period I was running what at that time was a middleware company for learning systems.

And it, yeah, and it was cloud-based and subscription driven.

And it enabled subscription-based pricing, subscription-based transactions for other learning systems. So quite early on.

For agents, I think, you know, it really, I’d been thinking and talking about agents for a long time because I’m interested in autonomous systems, which are a form of agent.

But like many people, what really caught my attention was Salesforce’s, you know, Agentforce announcement back in October of last year, which really, I think, attracted a lot of attention. And then when Jakob Nielsen, who I have a huge amount of respect for and who I’ve learned an enormous amount from reading his books and following his podcasts, came out and said, basically, agents are going to be the primary way that we use our AIs and the primary mode of interaction, I thought, OK, this is going to transform how we think about pricing in ways that are at least as big and probably bigger than the shift to the subscription economy.

Mark Stiving

Nice. Well, as always, you’re way ahead of me and I just hope to keep learning from you.

Steven Forth

Well, I hope to learn from PricingGPT.

And one of my little projects over the next couple of weeks is, you know, there are a growing number of these little pricing advisory agents, I’m going to call them agents, out there.

And I’m going to come up with a set of reference questions and run them against every little pricing advisory agent I can find and compare them.

Mark Stiving

Can I have the set of questions so I can go tweak my PricingGPT?

Steven Forth

I don’t know. I think that might be cheating.

Mark Stiving

I wouldn’t even know how to tweak it.

Steven Forth

I’ve invited people to suggest questions on LinkedIn.

So Mark, if you want to go and suggest the questions that you think you’re going to shine on.

Mark Stiving

Absolutely. Steven, this has been fun as always. I am going to ask the final question.

Do you have a piece of pricing advice around agents that you would give our listeners that you think could have a big impact on their business?

Steven Forth

Yeah, I think the critical thing here is to understand what job your agent is going to do, and all the rest of the pricing will unfold from understanding that. What’s the job, what’s the value of the job? If you can understand and be really crisp on that, I think the rest of the pricing will unfold very easily.

Mark Stiving

Awesome. Thank you. And thank you so much for your time today. If anybody wants to contact you, how can they do that?

Steven Forth

I think the best way is either a direct email, Steven, S-T-E-V-E-N at Ibbaka.com or reach out to me on LinkedIn. I’m Steven Forth. I’m easy to find and quite active.

Mark Stiving

Quite prolific, yes. To our listeners, thank you for your time. If you enjoyed this, would you please leave us a rating and a review? I’m actually going to go review this one. I thought it was awesome. And finally, if you have any questions or comments about the podcast, or if your company wants to get paid more for the value you deliver, email me, [email protected]. Now, go make an impact.

[Ad / Outro]